How power and scale – not intelligence – built the machines we now call smart.

In 2019, the global economy functioned without large language models. In 2025, they are marketed as essential infrastructure for business, governments, and education. These systems are massive pattern matchers that generate plausible text at extraordinary scale, but cannot reliably distinguish truth from falsehood, reason, or understand what they write (Bender et al., 2021; McCoy et al., 2023). Their dominance was not inevitable – it reflects the concentration of computational, data, and institutional resources in the hands of a few organisations (Whittaker, 2021; Crawford, 2021).

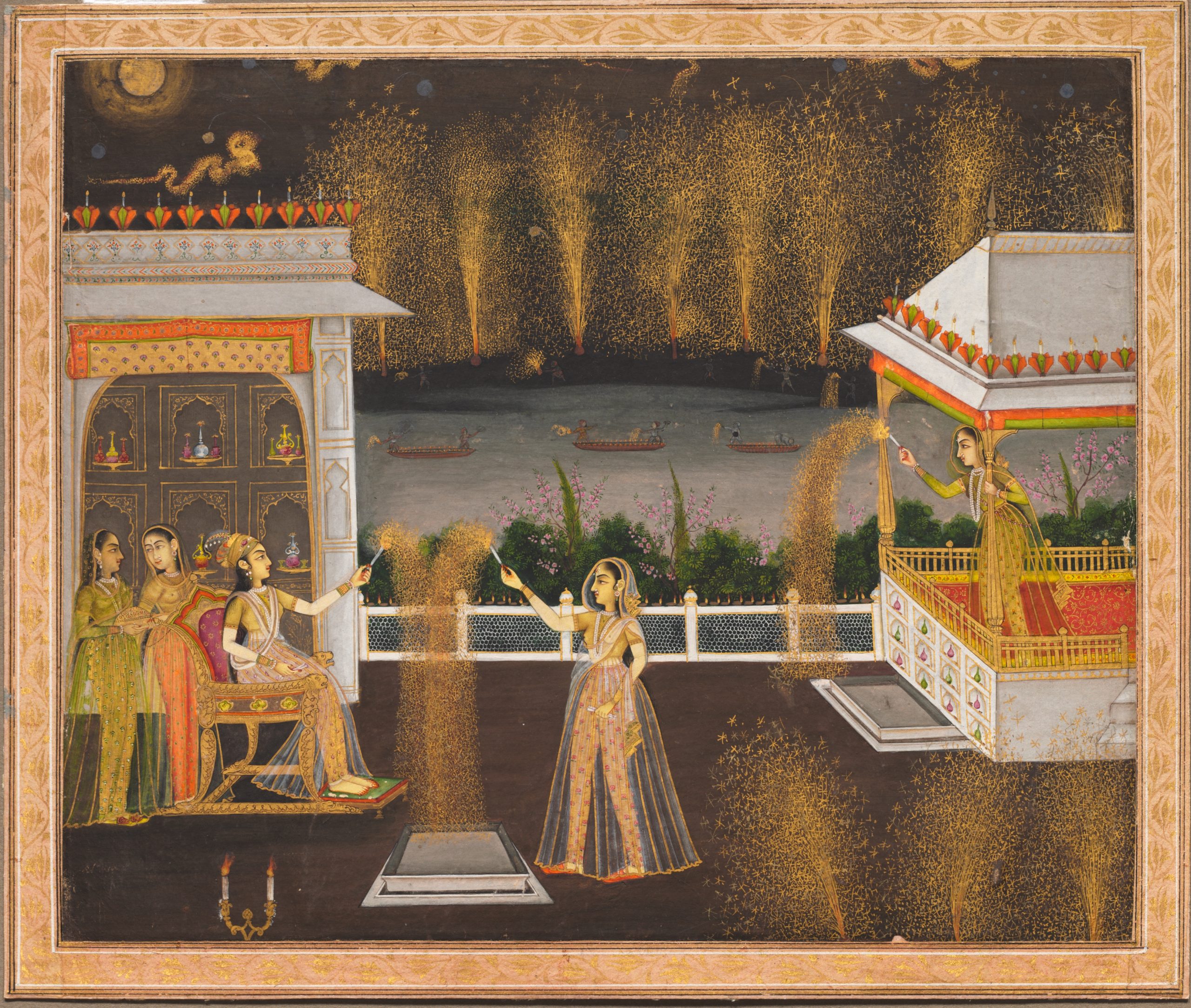

Royal Women Celebrating Diwali, circa 1760. Northern India, Uttar Pradesh, Gum tempera and gold on paper. Cleveland Museum of Art.

This is the second of three linked articles

- This is no AI bubble – here’s why.

- AI didn’t have to be this way – why we have the AI we do

- The uncomfortable truth about AGI hype.

This article looks at how control over material resources intersects with epistemic and institutional capture to determine why we have the type of ‘AI’ we have today. Drawing on historical examples, such as the industrial destruction of Dhaka muslin (Riello, 2013; Lemire, 2018), it highlights how definitions of value and progress are constructed and materially enforced. Understanding these dynamics is essential for organisations navigating AI adoption, as the seeming inevitability of the current AI paradigm masks choices shaped by power, resources, and incentives.

1. Material capture

The contemporary AI paradigm emerged from a combination of algorithmic innovations and, most importantly, unprecedented computational scale.

In 2012, AlexNet (Krizhevsky, Sutskever & Hinton, 2012) demonstrated that convolutional neural networks, when combined with GPU acceleration, novel activation functions (ReLU) and regularisation techniques (dropout), could dramatically outperform existing image classification techniques. The ImageNet victory revealed that sufficient compute and data could make neural networks work, and work well. Google’s subsequent hiring of key team members signalled industry recognition that competitive advantage would increasingly depend on computational infrastructure (Hooker, 2021), mapping a clear path for companies positioned to control these resources.

Emperor Jahangir Weighing Prince Khurram (later Shah Jahan), attributed to Manohar Das, c. 1615-1625. Ink, gouache, and gold on paper. British Museum, London.

The transformer architecture (Vaswani et al., 2017) from Google combined algorithmic breakthroughs with fortuitous alignment with existing hardware. Transformers improved on fundamental problems in sequence modelling, particularly capturing long-range dependencies through self-attention (Pascanu, Mikolov & Bengio, 2013). The importance of the transformer to contemporary AI cannot be overstated – it is the T in ChatGPT (where GPT stands for Generative Pre-trained Transformer).

Transformers map exceptionally well onto parallel processing hardware, like the GPU, as the key, query and value matrices which underpin ‘attention’ can be parallelised through batch processing (Tillet, Kung & Cox, 2019). There has always been a tight relationship between software and hardware, but the transformer entrenched the GPU (and other parallel processors) as key bottlenecks in the race to scale. Alternative paradigms that cannot exploit this hardware or software (linear matrix operations) are therefore excluded from the existing ecosystem (e.g. spiking architectures, optical computing) (Hooker, 2021).
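To make the parallelism point concrete, here is a minimal sketch of scaled dot-product attention written with plain Python lists. The toy matrices `Q`, `K` and `V` are hypothetical values chosen for illustration, and the helper names are not from any library. The check at the end shows that each query row can be computed independently of the others, which is the property that lets hardware process all rows in one batched matrix operation.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)

# Toy inputs (hypothetical): 3 tokens, embedding dimension 2.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# All query rows in one call, as a batched matrix operation would do it.
all_at_once = attention(Q, K, V)

# The same rows computed one query at a time.
one_by_one = [attention([q_row], K, V)[0] for q_row in Q]

# No row depends on any other row, so the two results agree.
rows_match = all(
    math.isclose(a, b)
    for row_a, row_b in zip(all_at_once, one_by_one)
    for a, b in zip(row_a, row_b)
)
print(rows_match)
```

Because no row needs to wait for another, the work spreads naturally across thousands of parallel cores. A recurrent model, by contrast, must process tokens in sequence.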

BERT (Devlin et al., 2018) demonstrated how transformers plus scale could achieve state-of-the-art performance across diverse language tasks. The full BERT-large (340M parameters) achieved an 80.5% average on the GLUE benchmark, a 7.7 percentage point improvement on previous approaches (Wang et al., 2018). While 340M parameters was mind-bogglingly huge in 2019, models have grown over 1000x larger in just six years.

The decisive discovery which underpinned the approach of scaling was that of Kaplan et al. (2020), who revealed a predictable relationship between model size, dataset size, compute budget, and performance. Hoffmann et al.’s (2022) refinement emphasised the importance of data in this relationship. Essentially, scaling laws state that performance follows a predictable power-law relationship with model size, dataset size, and compute – better performance emerges from scaling compute and data together in specific ratios. Scaling laws provided a roadmap for those with the resources to exploit them.
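The sense in which a power law acts as a roadmap can be sketched in a few lines. The example below assumes a single-variable form, loss = coeff × compute^(−exponent), with made-up constants. It is not the functional form or the fitted values from Kaplan et al. or Hoffmann et al. It fits a straight line in log-log space to a handful of small synthetic runs, then extrapolates to a budget several orders of magnitude larger.

```python
import math

# Hypothetical constants, chosen only for illustration (not from the cited papers).
TRUE_COEFF = 10.0
TRUE_EXPONENT = 0.05

def power_law_loss(compute, coeff=TRUE_COEFF, exponent=TRUE_EXPONENT):
    """Loss modelled as coeff * compute^(-exponent)."""
    return coeff * compute ** (-exponent)

def fit_power_law(xs, ys):
    """Least-squares line through (log x, log y); returns (coeff, exponent)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    variance = sum((a - mean_x) ** 2 for a in log_x)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

# Synthetic "small" runs spanning 10^15 to 10^19 FLOPs.
small_budgets = [10.0 ** power for power in range(15, 20)]
observed = [power_law_loss(c) for c in small_budgets]

coeff, exponent = fit_power_law(small_budgets, observed)

# Extrapolate to a budget 10,000x beyond the largest run in the fit.
predicted = coeff * (1e23) ** (-exponent)
print(f"fitted exponent: {exponent:.4f}, predicted loss at 1e23 FLOPs: {predicted:.4f}")
```

Real training runs are noisy, so the fit is only as good as the number and range of runs behind it. That range is exactly what depends on access to compute.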

Compute

Discovering scaling laws requires running experiments across multiple orders of magnitude, an extremely resource-intensive endeavour. Their discovery exemplifies how material capture has shaped scientific knowledge. Kaplan et al. (2020) from OpenAI trained models from 10^3 to 10^9 parameters, while Hoffmann et al.’s (2022) Chinchilla scaling law revision (from Google), emphasising data over parameters, required training over 400 models ranging from 70 million to 16 billion parameters. The Chinchilla-70B model itself required approximately 5.76×10^23 FLOPs to train – a computational investment far exceeding most research budgets. The scale of experimentation demands not just compute but distributed training frameworks, extensive hyperparameter sweeps, and repeated runs.
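The quoted FLOP figure can be sanity-checked with the common rule of thumb that training compute is roughly 6 × parameters × training tokens (an approximation used in Kaplan et al., 2020). The 1.4 trillion token count is the figure reported for Chinchilla by Hoffmann et al. (2022). The per-accelerator throughput below is a purely hypothetical round number, included only to convert FLOPs into a sense of wall-clock cost.

```python
# Rule of thumb: training FLOPs ~ 6 * parameters * tokens (an approximation).
PARAMS = 70e9            # Chinchilla-70B parameter count
TOKENS = 1.4e12          # training tokens reported by Hoffmann et al. (2022)
QUOTED_FLOPS = 5.76e23   # figure quoted in the text above

estimated_flops = 6 * PARAMS * TOKENS

# Hypothetical sustained throughput for one accelerator (an assumption, not a spec).
ASSUMED_FLOPS_PER_SECOND = 1e14
SECONDS_PER_DAY = 86_400
accelerator_days = QUOTED_FLOPS / ASSUMED_FLOPS_PER_SECOND / SECONDS_PER_DAY

print(f"rule-of-thumb estimate: {estimated_flops:.2e} FLOPs")
print(f"estimate / quoted figure: {estimated_flops / QUOTED_FLOPS:.3f}")
print(f"accelerator-days at the assumed throughput: {accelerator_days:,.0f}")
```

The rule of thumb lands within a few percent of the quoted figure. Under the assumed throughput, that single training run would occupy tens of thousands of accelerator-days, before counting the hundreds of smaller runs needed to fit the law in the first place.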

Lady Maria Conyngham. circa 1824-1825. Sir Thomas Lawrence (1769-1830). Oil on canvas. The Met.

The implications extend beyond resource access. Organisations with sufficient compute can explore the parameter space to discover empirical regularities, while others must accept these findings without the ability to directly verify or extend them. When Besiroglu et al. (2024) attempted to replicate Hoffmann et al.’s scaling laws, they had to reconstruct data from figures rather than published datasets, discovering methodological errors and implausibly narrow confidence intervals in the original work.

This creates a form of dependency where the scientific community’s understanding of what works at scale is mediated by the few capable of running such experiments. That even DeepMind’s scaling law re-estimation contained significant errors underscores how limited peer review becomes when few possess the resources to verify claims.

The extreme private investment in GPU infrastructure currently underway intensifies the concentration of compute (Miller, 2022). This entrenches an epistemic asymmetry: the ability to discover what works at scale becomes restricted to those with scale.

Data

Platforms possess a twofold advantage in data, namely volume and the engineering capacity to process it at scale. While much training data is nominally ‘public’ – Common Crawl, Wikipedia, GitHub – transforming petabytes of raw web scrapes into usable training sets requires specialised infrastructure and human capital concentrated in few organisations (Bender et al., 2021; Dodge et al., 2021). Google’s C4 dataset, derived from Common Crawl, exemplifies this – creating it required deduplication across 750GB of compressed text, removing toxic content, and filtering for quality (Raffel et al., 2020). The distributed computing infrastructure and engineering expertise required took decades to develop at companies like Google (Dean, 2020). The volume advantage stems from exclusive access to humanity’s digital behaviours, central to these companies’ identities (Zuboff, 2019).
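The kind of processing described above can be illustrated with a toy cleaning pass. The sketch below is not the actual C4 pipeline: the blocklist entry, the word threshold and the sample pages are all hypothetical, and real pipelines run comparable steps across distributed clusters rather than a single list in memory. It shows three typical stages: rejecting pages by blocklist, keeping only lines that look like complete sentences, and dropping exact duplicates by content hash.

```python
import hashlib

# All thresholds and list entries below are hypothetical placeholders.
BLOCKLIST = {"badword"}
MIN_WORDS_PER_LINE = 3
TERMINAL_PUNCTUATION = (".", "!", "?", '"')

def clean_page(text):
    """Return the sentence-like lines of a page, or None if the page is rejected."""
    if any(word in BLOCKLIST for word in text.lower().split()):
        return None
    kept = [
        line.strip()
        for line in text.splitlines()
        if line.strip().endswith(TERMINAL_PUNCTUATION)
        and len(line.split()) >= MIN_WORDS_PER_LINE
    ]
    return "\n".join(kept) if kept else None

def build_corpus(pages):
    """Clean each page, then drop exact duplicates using a content hash."""
    seen = set()
    corpus = []
    for page in pages:
        cleaned = clean_page(page)
        if cleaned is None:
            continue
        digest = hashlib.sha256(cleaned.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        corpus.append(cleaned)
    return corpus

raw_pages = [
    "Home | About | Login\nMuslin was woven in Bengal for centuries.",
    "Muslin was woven in Bengal for centuries.\nClick here",
    "This page contains a badword and is dropped.",
    "Menu\nCart",
]
corpus = build_corpus(raw_pages)
print(corpus)
```

Four raw pages yield a single usable document here. Every one of these choices (what counts as a sentence, what goes on the blocklist) changes the resulting dataset, which is why two teams starting from the same crawl can end up with very different corpora.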

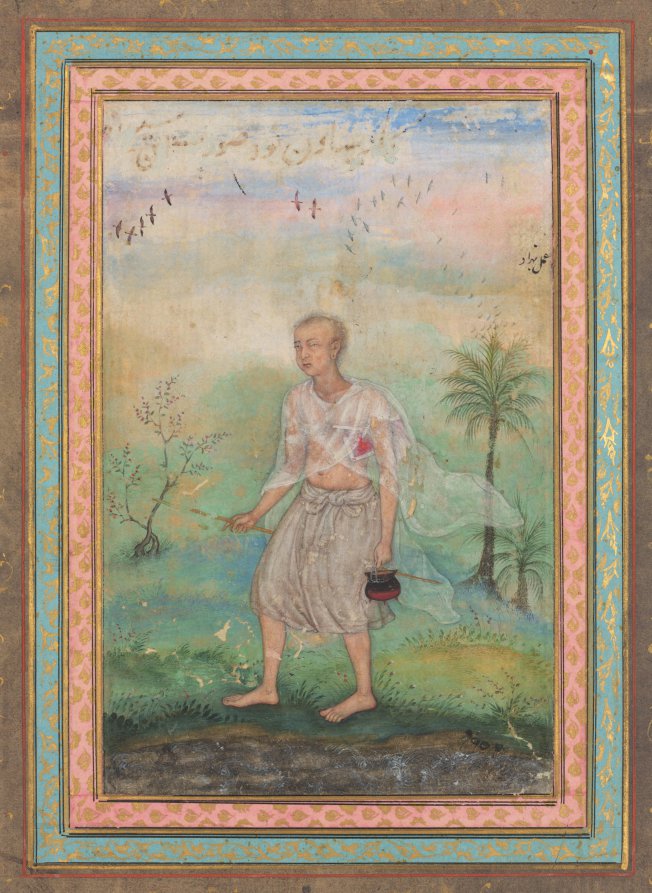

Jain Ascetic Walking Along a Riverbank, c. 1600, Basavana (Indian, active c. 1560–1600). Gum tempera, ink, and gold on paper. Severance and Greta Millikin Collection, The Cleveland Museum of Art.

This concentration enables the current paradigm of unsupervised learning on massive text corpora. Unsupervised learning made large-scale training feasible, as manual annotation at the scale of billions of tokens (a term referring to words or sub-words in the dataset) would be impractical. But this feasibility depends on having enough quality data and processing power. While the Pile (Gao et al., 2020) or RedPajama (Together Computer, 2023) datasets attempt to democratise access, they cannot match the proprietary data mixtures and processing pipelines of major labs (Luccioni & Viviano, 2021). Even when starting from the same Common Crawl base, outputs can differ dramatically based on processing capabilities (Bender et al., 2021; Dodge et al., 2021).

Perhaps most significantly, large platforms operate with de facto legal impunity regarding copyright that smaller actors cannot assume. The contrast is stark: Aaron Swartz faced 35 years in federal prison and ultimately took his own life while under prosecution for downloading academic articles from JSTOR (Lessig, 2013). Meanwhile Google Books digitised 25 million copyrighted books and settled for $125 million (Google Inc. et al., v. Authors Guild, 2005-2011), which, given their rent-derived revenues, does not amount to a serious deterrent. Meta’s own internal communications, revealed in litigation, explicitly acknowledge using torrented books to train models (Silverman v. Meta Platforms, 2023; Guardian, 2025). OpenAI faces multiple lawsuits over training data (New York Times v. Microsoft Corporation et al., 2023; Tremblay v. OpenAI, 2023). As of October 2025, there are 54 copyright suits against AI companies (Chat GPT is Eating the World, 2025).

While AI companies have previously invoked fair use successfully in copyright litigation, the Bartz v. Anthropic class action presents a new legal threat. In September 2025, Judge William Alsup granted preliminary approval for a $1.5 billion class action settlement, the largest copyright settlement in U.S. history, in a case alleging that Anthropic used authors’ works without permission to train AI models (Levy, 2025; Chat GPT Is Eating the World, 2025). Unlike individual lawsuits, class actions create collective claims that may not be subject to the same fair use defenses, meaning that even AI companies with strong fair use arguments can face substantial liability. This settlement sets a benchmark for other AI copyright litigation, including proposed class actions against OpenAI, and highlights the differential access to high-quality training data, as academic researchers, public bodies, or startups cannot risk similar legal exposure. The difference is not just resources but institutional power – the ability to transform potential copyright violation into accepted innovation through market position and legal departments that can weather years of litigation.

Infrastructure

The software infrastructure of our contemporary AI – ‘the water AI swims in’ (Whittaker, 2021) – creates path dependencies that extend far beyond simple resource access. The large majority of AI research uses PyTorch or TensorFlow, with PyTorch alone reported to account for more than 70% of AI research (PyTorch, 2024). Both frameworks are optimised for specific hardware: PyTorch for NVIDIA GPUs, TensorFlow for Google TPUs (Paszke et al., 2019; Abadi et al., 2016). Even independent tools like vLLM (Kwon et al., 2023) and Triton (Tillet et al., 2019) require NVIDIA or Google hardware. This creates a compounding architectural lock-in: researchers develop for available hardware and tooling, which shapes architectural attention, which drives future hardware development.

Alternative paradigms – neuromorphic computing, optical processors, or non-transformer architectures – face not merely hardware disadvantages but entire missing ecosystems: no optimised compilers, debuggers, profiling tools, or developer expertise. Tensors, attention mechanisms, and batched operations are all shaped by GPU capabilities.

Only China, through state-coordinated efforts involving Baidu’s Kunlun chips, Alibaba’s Hanguang processors, and Huawei’s Ascend series, has attempted to escape this dependency. Driven by U.S. chip export controls and backed by hundreds of billions in state investment, China demonstrates what breaking infrastructural dependency actually requires, namely wholesale ecosystem reconstruction at nation-state scale. That only geopolitical necessity with massive state backing has produced a credible alternative underscores how entrenched this infrastructure dominance has become.

Platforms controlling base layers shape what can be built above, creating technological dependency where innovation must follow paths predetermined by infrastructure owners.

2. Epistemic capture

Yet control over the resources required to scale up large language models is only part of the picture. Material capture alone doesn’t explain why scale is treated as synonymous with progress. What has also happened is that how we measure success and progress in the ‘pursuit of intelligence’, or how we measure the value of LLMs, has been redefined in the way that best benefits those who can scale.

GLUE

GLUE (General Language Understanding Evaluation) and its successor SuperGLUE exemplify this capture (Wang et al., 2018). By aggregating diverse natural language tasks – sentiment analysis, paraphrase detection, natural language inference – into a single leaderboard score, these benchmarks converted multiple research goals into a single competitive axis. This axis was particularly amenable to scalable transformers trained on massive text corpora, thus amplifying the advantage of actors who already had the data, compute, and institutional leverage to exploit scale.

The benchmarks explicitly prioritised ‘transfer learning’ – capabilities that emerge from massive pretraining – over domain expertise or efficiency. A model excelling at specific tasks but struggling on others would score poorly overall, incentivising generalist approaches which require massive corpora and compute.
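The effect of collapsing several tasks into one number can be shown with a small worked example. The scores below are hypothetical, not real benchmark results, and the unweighted mean is a simplification of how a multi-task leaderboard aggregates its tasks.

```python
from statistics import mean

# Hypothetical per-task scores (0-100); not real benchmark results.
specialist = {"sentiment": 97.0, "paraphrase": 60.0, "inference": 55.0}
generalist = {"sentiment": 85.0, "paraphrase": 84.0, "inference": 83.0}

def leaderboard_score(task_scores):
    """Unweighted mean across tasks: one number per model."""
    return mean(task_scores.values())

specialist_score = leaderboard_score(specialist)
generalist_score = leaderboard_score(generalist)

# Which model is actually best at the one task a given user might care about?
models = {"specialist": specialist, "generalist": generalist}
best_on_sentiment = max(models, key=lambda name: models[name]["sentiment"])

print(f"specialist leaderboard score: {specialist_score:.1f}")
print(f"generalist leaderboard score: {generalist_score:.1f}")
print(f"best on sentiment alone: {best_on_sentiment}")
```

The specialist is clearly better on the single task it was built for, yet it ranks well below the generalist on the aggregate. A user who only needs sentiment analysis would be poorly served by choosing from the leaderboard alone.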

A woman in Blue (Portrait of the Duchess of Beaufort). circa 1775-1780. Thomas Gainsborough (1727-1788). Oil on canvas. Hermitage Museum.

BERT (Devlin et al., 2018) achieved a 7-point GLUE improvement through massive pretraining, validating an approach that would subsequently become the paradigm. GLUE rewarded transfer learning, transfer learning rewarded scale – and scale determines winners.

A growing literature documents how leaderboards distort research priorities (Ethayarajh & Jurafsky, 2020; Raji et al., 2021; Alzahrani et al., 2024) – leaderboards are not neutral instruments but socio-technical ones that canalise research towards what is measurable and fundable. By privileging tasks that scaled with compute and data, GLUE materially channelled the community’s attention toward exploiting scalable text-based pretraining. Alternative metrics, such as efficiency, interpretability, fairness, and truthfulness, all became secondary. Saturation of these benchmarks quickly followed, and evaluation shifted to less rigorous metrics, such as preference-based rankings (LMSYS Arena), private unverifiable benchmarks, and generation fluency.

Institutional capture

The Honourable Miss Caroline Fox (1767-1845), 1810. James Northcote (1746-1831). Oil on canvas. Royal Albert Memorial Museum.

These redefined metrics gain force through institutional arrangements. Academia’s reliance on tech resources extends beyond compute access, to fundamental questions of what research is possible, fundable, and legitimate (Birhane, 2021; Crawford, 2021).

A survey of EMNLP 2022 papers (Aitken et al., 2024) found that while only 13.3% of ACL Anthology papers had industry affiliation, over three times that proportion of critical citations – datasets, pretrained models, prior best results – came from industry. Moreover, 87% of papers used at least one pretrained model, nearly all produced by industry, making industry components a de facto requirement for publication in top venues.

Industry-sponsored PhD programmes and dual-affiliation arrangements, where professors draw tech salaries while publishing under university banners, align academic pursuits with the incentives of tech companies (Whittaker, 2021). Stanford’s Center for Research on Foundation Models (CRFM), which received initial funding from Google, Meta, and Amazon, exemplifies how this capture shapes knowledge production. In 2021, the CRFM published a report rebranding ‘large language models’ (among the most compute-intensive and industry-dependent techniques) as ‘foundation models’, framing them as a ‘paradigm shift’ worthy of a new research centre (Bommasani et al., 2021). This rebranding distances LLMs from sustained criticism about bias, environmental costs, and concentrated power while presenting industry-captured techniques as scientific progress.

As Meredith Whittaker documents, when Google instructs researchers to “strike a positive tone,” when it fires Timnit Gebru for critiquing LLMs central to product roadmaps, when Meta revokes data access from NYU researchers examining January 6th – these aren’t isolated incidents but rather boundary-setting around acceptable knowledge (Hao, 2020; Guardian, 2023). The combination creates a system where the easiest path to research success runs through questions and methods that favour the incentives of concentrated power.

Muslin

The redefinition of value to serve concentrated power is not unique to AI. A persistent legacy of industrialisation was the conversion of qualitative value into quantitative standards that meet industrial requirements.

The Manchester Cotton Exchange’s classification system (1870s-1890s) demonstrates how industrial capacity redefines value itself. The Exchange created global standards measuring precisely what only industrial mills could optimise: staple length, tensile strength, uniformity, and thread count, at scale. Properties that gave handwoven textiles their superiority, like breathability, drape, regional character, had no classification codes, no market prices, no existence in the system of value. This wasn’t neutral value measurement, but rather definition of quality as only what machines could produce.

These global standards were enforced as part of a colonial regime, through which direct economic and physical coercive forces channelled power back into British industrial mills. The redefinition of value functioned as a component of the colonial monopoly on textile manufacture – both manifesting in the destruction of Dhaka muslin.

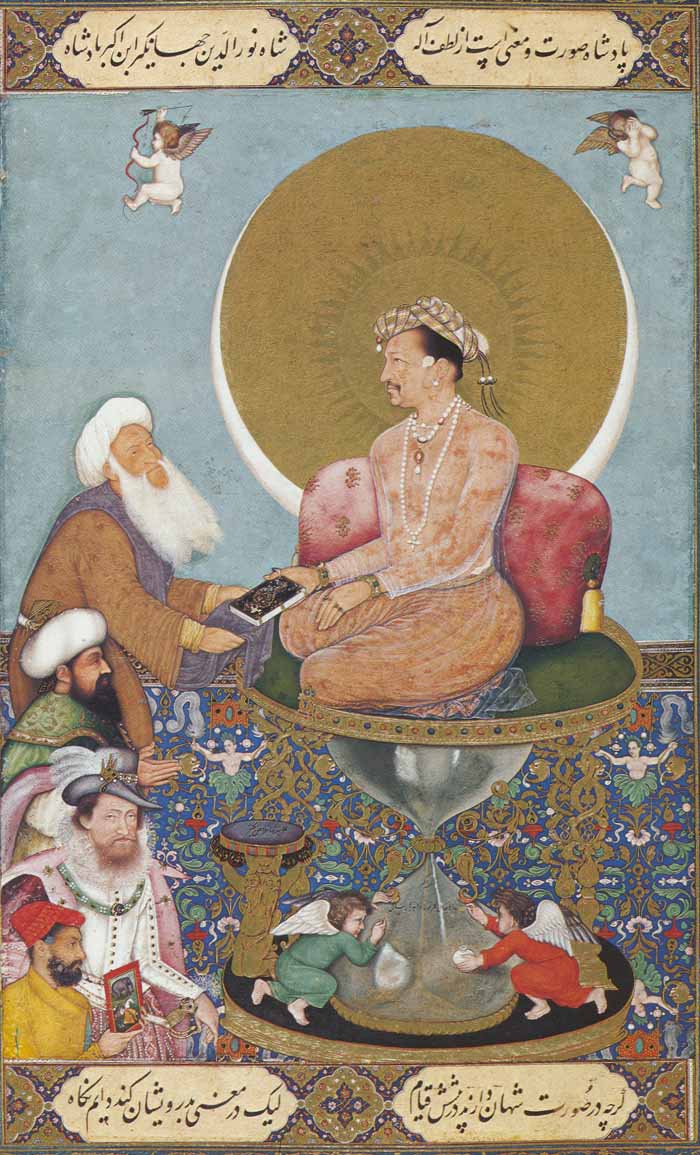

Jahangir Preferring a Sufi Shaikh to Kings, Bichitr, c. 1620. Ink, gold and watercolor on paper. Freer Gallery of Art, Smithsonian Institution.

Dhaka muslin was a fabric produced for millennia in Bengal. The fabric was valued for its translucence, durability and texture – so fine that a bolt could pass through a wedding ring (Sulaiman, 9th century), and the cloth was described by Xuanzang in the seventh century as ‘like the light vapours of the dawn’ (Beal, 1884). Petronius (1st century) commented ‘thy bride might as well clothe herself with a garment of the wind as stand forth publicly naked under her clouds of muslin’.

The cloth reached its peak during the period of the Mughal empire, from the early sixteenth to the eighteenth century. Within Mughal court culture, muslin held a significance beyond luxury, signifying both moral and artistic refinement. Its circulation expressed Mughal imperial power through systems of ceremonial patronage and gift exchange. The finest pieces (termed mulmul khas) were reserved for imperial use, featured in miniature paintings as markers of rank and refinement, and formed a crucial part of the khilat system of honorific robes that structured political relationships.

While the cloth had been prized globally by elites for millennia, it became increasingly popular in European fashion by the late 18th century. It was the luxury textile of its era, worn by Marie Antoinette and Joséphine Bonaparte, essential to the neoclassical aesthetic that dominated elite fashion (Riello, 2013).

The British East India Company gradually expanded control over Bengal from the 1750s, formalising rule after the Battle of Plassey (1757) and gaining revenue collection rights in 1765. This gave them control over the source of Europe’s most coveted fabric. By 1793, the Permanent Settlement restructured land ownership, but Mughal authority persisted nominally until 1857, when direct British Crown rule began following the Indian Rebellion.

Throughout this period of control expansion, the EIC systematically interfered with muslin production. They pushed weavers into debt while demanding higher volumes at lower prices. British colonialists codified the production process, and began producing inferior imitations in Lancashire industrial mills. By the late 19th century, aided by the introduction of the Cotton Exchange standards, as well as tariffs, duties and taxes imposed throughout decades of expanding British control, British cotton imitations had flooded not only European markets but Indian ones as well.

The raw material required, a variety of cotton called phuti karpas, thrived only in a specific microclimate. The sixteen-step manufacturing process, distributed across sixteen separate villages around Dhaka, has now been lost – and the plant itself is now extinct (Islam, 2016). Modern attempts to recreate the fabric have failed, achieving a thread count of around 300 where the original reached thread counts of 1200 – surpassing modern-day luxury fabrics (Islam, 2016). Modern muslins have thread counts between 40 and 80.

The British textile industry’s ability to impose mechanical standards depended on the colonial domination of Bengal and the subordination of local economies to imperial trade policy – the redefinition of quality in textiles was inseparable from military conquest, taxation regimes, and the dismantling of artisanal production in Dhaka and beyond (Ray, 1973).

The Artist and her Mother, 1816. Rolinda Sharples. Oil on panel.

The Dhaka muslin analogy is in this sense imperfect. Colonial suppression of muslin involved direct economic and physical coercion. Yet the differences demonstrate how value redefinition is contingent on the specific power structures that enable it, and serves that power, illuminating how industrial and digital actors redefine value to fit their means of production, and how epistemic traditions can be lost when their modes of production become structurally unviable.

The destruction of Dhaka muslin through the extinction of phuti karpas and the loss of Dhaka’s distributed manufacturing knowledge demonstrates how measurement systems function as mechanisms of power concentration within particular structural arrangements. Cotton standards that favoured scale were not discovered but constructed, created by and for industrial mills, then enforced through colonial power.

Understanding AI requires examining how standards emerge from and reinforce contemporary power configurations. When we accept that intelligence equates to scaled pattern matching, that progress means larger models, that success means beating benchmarks designed by those who can afford to beat them, we participate in a redefinition that serves specific interests while appearing as a neutral technical instrument. Value systems emerge from particular configurations of power and resources, serve those who create them, and reshape entire fields of possibility through definitional exclusion.

The historical parallel reveals why the current AI paradigm’s seeming inevitability masks a degree of contingency. Understanding the processes of how measurement becomes prescription, how technical standards encode economic power, how alternative paradigms become literally invaluable, is essential for navigating AI’s current moment. Without this historical consciousness, the current AI paradigm appears inevitable rather than contingent, its metrics objective rather than constructed, its trajectory fixed rather than chosen by those with the resources to choose. Like muslin’s breathability and drape, qualities such as interpretability, efficiency, or domain expertise have no existence in a system that values scale.

Conclusion

Allegorical Representation of Emperor Jahangir and Shah Abbas, Abu’l Hasan, c. 1618. From the St. Petersburg Album. Freer and Sackler Gallery, Smithsonian Institution.

GLUE did not solely measure progress in natural language understanding, but also reified the trajectory that AI development had long been on – scale as the solution – already taking place within Big Tech’s infrastructural and epistemic enclosure. By privileging tasks that scaled predictably with compute and data, it validated an approach where ‘more’ became synonymous with ‘better’. Decoder-only models (which our current language models are) could be scaled more efficiently, trained with simpler objectives (next-token prediction) and produce outputs that feel intelligent even when lacking understanding.

This critique does not dismiss the genuine utility of transformer or similar architectures within constrained domains – machine translation with parallel corpora, protein structure prediction, code completion, semantic search, or radiography, for example. In these contexts, with clear evaluation criteria and verifiable outputs, transformers demonstrate real value – albeit often within the concentrated infrastructure and data regimes discussed. But the repackaging of these narrow successes into general-purpose ‘intelligence’ represents precisely the kind of value redefinition discussed above. Rather than tools for specific tasks, we are marketed essential infrastructure for everything, through systems that cannot reliably distinguish truth from falsehood. Decoder-based language models, optimised for next-token prediction of the web rather than understanding, can produce outputs that feel intelligent, and this surface plausibility fuels their marketing as universal infrastructure.

For organisations navigating AI adoption, understanding these dynamics is essential for strategic decision-making. The concentration of AI infrastructure in Big Tech hands means companies risk essentially renting access to capabilities they cannot own or control, creating permanent dependencies rather than competitive advantages. Tangible evidence of dependency risk comes from the recent AWS outage – when Amazon’s cloud services experienced problems, over 2,000 companies worldwide were affected simultaneously, from banking to communications, demonstrating how critical infrastructure failures can cascade across entire sectors (The Guardian, 2025).

The MIT/BCG studies showing limited ROI on AI investments should not be treated as anomalies, but as potentially pointing to this structural misalignment – current AI systems are optimised for Big Tech’s economies of scale and data aggregation, not necessarily for the diverse and situated needs of smaller enterprises or public institutions.

Individuals and organisations should therefore ask not only whether AI ‘works’, but for whom it works. They should critically evaluate whether they’re solving real problems or buying into a manufactured necessity, and consider alternatives such as domain-specific models, open-source ecosystems, and smaller-scale architectures that preserve autonomy, transparency, and contextual alignment.

History reminds us that definitions of value should be interrogated, as they can serve as more than a measurement of progress.

Abdalla, M., Wahle, J. P., Ruas, T. L., Névéol, A., Ducel, F., Mohammad, S., & Fort, K. (2023). The elephant in the room: Analyzing the presence of big tech in natural language processing research. Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, 13141-13160.

Aitken, W., Abdalla, M., Rudie, K., & Stinson, C. (2024). Collaboration or corporate capture? Quantifying NLP’s reliance on industry artifacts and contributions. Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, 3433-3448.

Alzahrani, N., Alyahya, H. A., Alnumay, Y., Alrashed, S., Alsubaie, S., Almushaykeh, Y., Mirza, F., Alotaibi, N., Altwairesh, N., Alowisheq, A., Bari, M. S., Khan, H. (2024). When Benchmarks are Targets: Revealing the Sensitivity of Large Language Model Leaderboards. arXiv:2402.01781

Beal, S. (1884). Si-Yu-Ki: Buddhist records of the Western world. London: Trübner & Co.

Bender, E. M., Gebru, T., McMillan-Major, A. and Shmitchell, S. (2021) On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21).

Besiroglu, T., Erdil, E., Barnett, M. & You, J. (2024) Chinchilla Scaling: A replication attempt. arXiv:2404.10102

Birhane, A., Prabhu, V., Kahembwe, E. (2021) Multimodal datasets: misogyny, pornography, and malignant stereotypes. arXiv:2110.01963

Bommasani, R., Hudson, D. A., Adeli, E., et al. (2021) On the Opportunities and Risks of Foundation Models. Center for Research on Foundation Models (CRFM), Stanford Institute for Human-Centered Artificial Intelligence (HAI).

Crawford, K. (2021) Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press.

Dean, J. (2020) The Deep Learning Revolution and Its Implications for Computer Architecture. Communications of the ACM, 63(7), 25–31.

Devlin, J., Chang, M.-W., Lee, K. and Toutanova, K. (2019) BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. NAACL-HLT.

Dodge, J., Sap, M., Marasović, A., Agnew, W., Ilharco, G., Groeneveld, D., Mitchell, M., and Gardner, M. (2021) Documenting Large Webtext Corpora: A Case Study on the Colossal Clean Crawled Corpus arXiv:2104.08758.

Ethayarajh, K., & Jurafsky, D. (2020). Utility is in the eye of the user: A critique of NLP leaderboards. Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, 4846-4853.

Gao, L., Biderman, S., Black, S. et al. (2020) The Pile: An 800GB Dataset of Diverse Text for Language Modeling. arXiv:2101.00027.

Google Inc. et al. v. Authors Guild (2005–2015) U.S. District Court, Southern District of New York.

Hao, K. (2020). We read the paper that forced Timnit Gebru out of Google. Here’s what it says. MIT Technology Review.

Hoffmann, J., Borgeaud, S., Mensch, A. et al. (2022) Training Compute-Optimal Large Language Models. arXiv:2203.15556.

Hooker, S. (2021) Moving Beyond “Algorithmic Bias Is a Data Problem.” Patterns, 2(4).

Islam, S. (2016). Our Story of Dhaka Muslin. AramcoWorld.

Kaplan, J., McCandlish, S., Henighan, T. et al. (2020) Scaling Laws for Neural Language Models. arXiv:2001.08361.

Krizhevsky, A., Sutskever, I. and Hinton, G. E. (2012) ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems (NeurIPS).

Lessig, L. (2013) Aaron’s Law: Law and Justice in a Digital Age. Harvard Law Review, 126(2), 1–12.

Luccioni, A. S. and Viviano, J. (2021) What’s in the Box? A Preliminary Analysis of Undesirable Content in the Common Crawl Corpus. arXiv:2105.02732

Miller, C. (2022) Chip War: The Fight for the World’s Most Critical Technology. Simon & Schuster.

New York Times Co. v. Microsoft Corporation et al, United States District Court for the Southern District of New York

Pascanu, R., Mikolov, T. and Bengio, Y. (2013) On the Difficulty of Training Recurrent Neural Networks. International Conference on Machine Learning (ICML).

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., and Sutskever, I. (2019) Language Models are Unsupervised Multitask Learners. OpenAI Technical Report.

Raffel, C., Shazeer, N., Roberts, A., et al. (2020) Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Journal of Machine Learning Research, 21(140), 1–67.

Raji, I. D., Denton, E., Bender, E. M., Hanna, A., & Paullada, A. (2021). AI and the everything in the whole wide world benchmark. Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks.

Ray, I. (1973). The crisis of Bengal agriculture. The Indian Economic and Social History Review, vol. 10(3), pp. 244-279.

Riello, G. (2013) Cotton: The Fabric that Made the Modern World. Cambridge University Press.

The Guardian (22 May 2023) ‘“There was all sorts of toxic behaviour”: Timnit Gebru on her sacking by Google, AI’s dangers and big tech’s biases’: https://www.theguardian.com/lifeandstyle/2023/may/22/there-was-all-sorts-of-toxic-behaviour-timnit-gebru-on-her-sacking-by-google-ais-dangers-and-big-techs-biases

The Guardian (10 January 2025) ‘Zuckerberg approved Meta’s use of ‘pirated’ books to train AI models, authors claim’: https://www.theguardian.com/technology/2025/jan/10/mark-zuckerberg-meta-books-ai-models-sarah-silverman

The Guardian (26 June 2025) ‘Meta wins AI copyright lawsuit as US judge rules against authors’: https://www.theguardian.com/technology/2025/jun/26/meta-wins-ai-copyright-lawsuit-as-us-judge-rules-against-authors

The Guardian (20 October 2025) ‘Amazon Web Services outage shows internet users ‘at mercy’ of too few providers, experts say’: https://www.theguardian.com/technology/2025/oct/20/amazon-web-services-aws-outage-hits-dozens-websites-apps

Tillet, P., Kung, H. and Cox, D. (2019) Triton: An Intermediate Language and Compiler for Tiled Neural Network Computations. PPoPP ’19.

Together Computer (2023) RedPajama: Open Dataset for Training Large Language Models. Hugging Face Release Notes.

Vaswani, A., Shazeer, N., Parmar, N. et al. (2017) Attention Is All You Need. Advances in Neural Information Processing Systems (NeurIPS).

Wang, A., Singh, A., Michael, J., Hill, F., Levy, O. and Bowman, S. R. (2018) GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding. ICLR.

Whittaker, M. (2021) The Steep Cost of Capture: How Big Tech Dominates AI Research. AI Now Institute.

Zuboff, S. (2019) The Age of Surveillance Capitalism. Profile Books.